Quick Answer

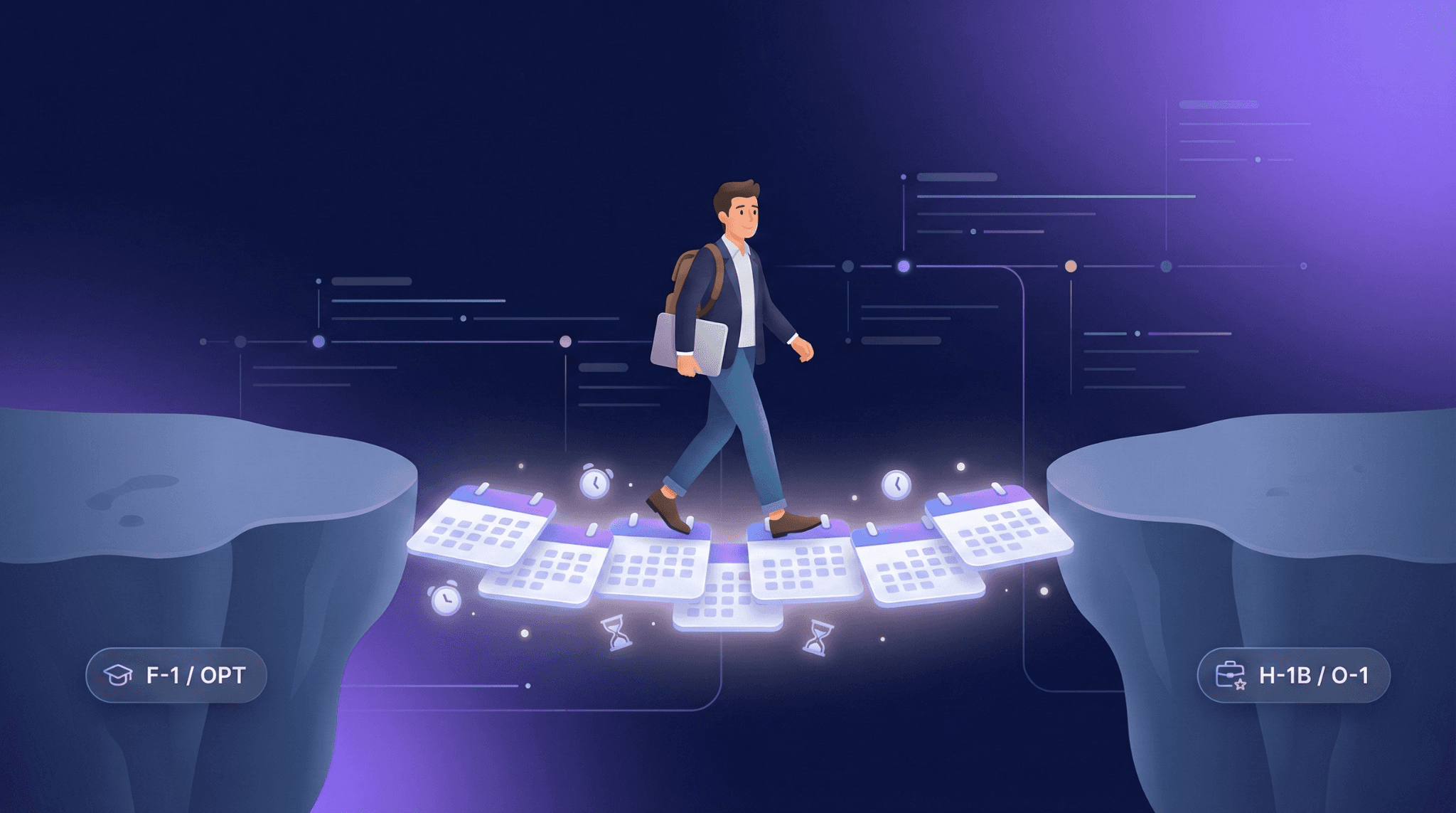

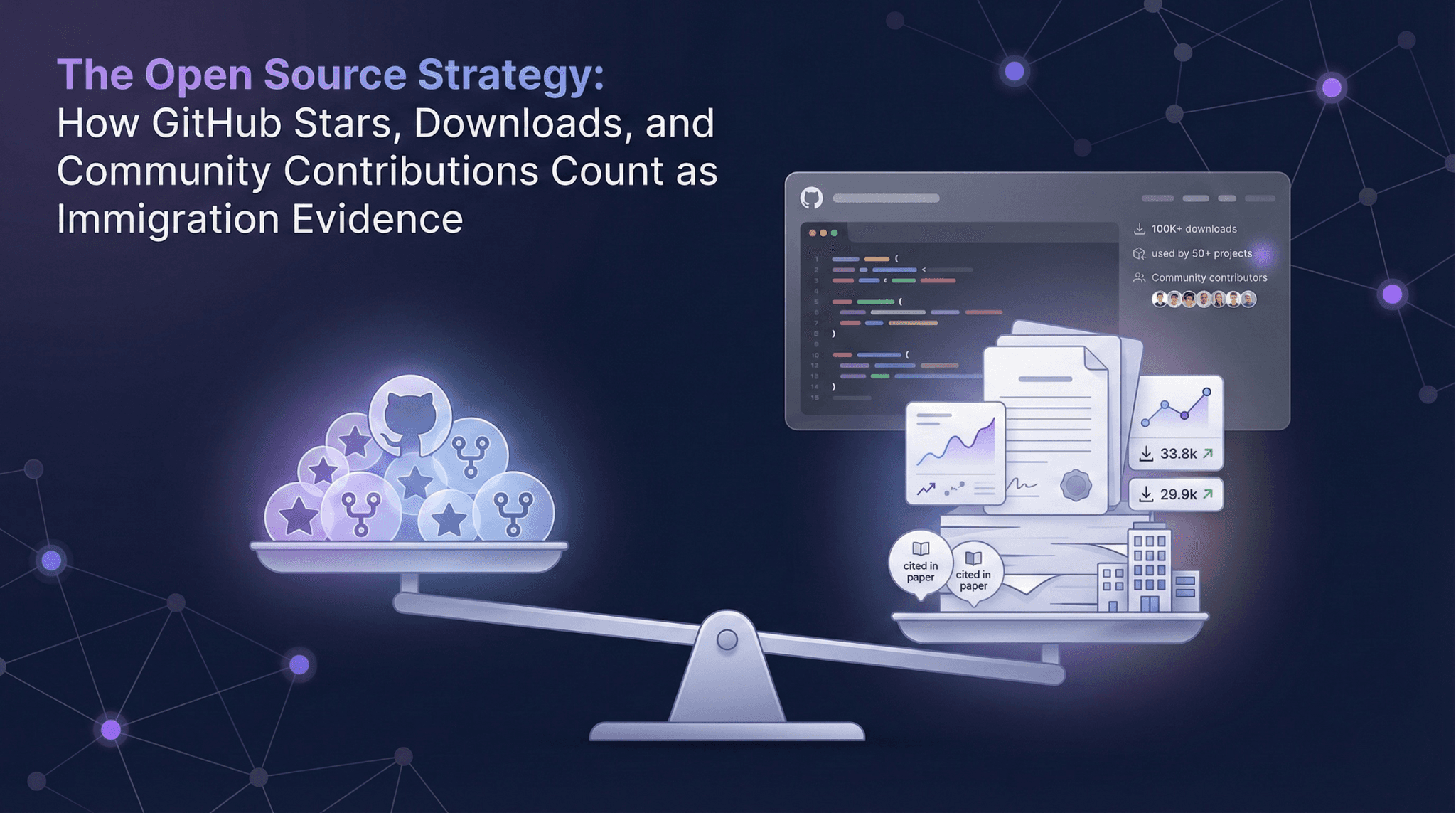

Open source work can satisfy O-1/EB-1A criteria for "original contributions" and "authorship" if properly documented. Key metrics USCIS values: independent adoption by companies/researchers, downloads/usage statistics, citations in academic papers, speaking invitations about your project, and press coverage.

GitHub stars alone are weak; you need evidence of real-world impact like testimonials from users, derivative projects, production deployment, or commercial adoption.

Key Takeaways

Open source can satisfy multiple criteria

Original contributions (Criterion 5), authorship (Criterion 6 if you write technical articles about it), and sometimes judging (if you review PRs for major projects).

Stars and forks aren't enough

USCIS wants evidence of actual usage and impact, not popularity metrics.

Documentation is everything

Screenshots alone won't work. Back them with third-party verification, testimonials, and usage statistics.

Conference talks about your project are powerful

Speaking at major tech conferences about your open source work can support multiple criteria.

Commercial adoption is the strongest evidence

If companies use your project in production, get letters from them.

Academic citations count more than GitHub stars

If researchers cite your project in papers, that's stronger than 10,000 stars.

Which O-1/EB-1A Criteria Open Source Can Satisfy

Criterion 5: Original Contributions of Major Significance

This is the primary criterion for open source work.

What USCIS wants to see:

Your project solved a significant problem in your field

Other developers/companies adopted your solution

Impact can be measured (downloads, dependent projects, production deployments)

Independent experts recognize the contribution's importance

Evidence that works:

Usage statistics (npm downloads, PyPI downloads, Docker pulls)

Dependent projects (X projects depend on your library)

Production deployment (Y companies use it in production)

Academic citations (papers that cite your project)

Testimonial letters from users (especially CTOs, senior engineers at known companies)

Evidence that's weak:

GitHub stars alone

Your own claims about importance

Personal projects with no external users

Criterion 6: Authorship of Scholarly Articles or Technical Publications

How open source satisfies this:

Technical blog posts explaining your project (published on major platforms)

Documentation that's referenced by others

Contributing to official project documentation for major frameworks

Technical papers presented at conferences about your work

Evidence that works:

Blog posts on Medium, Dev.to, HackerNoon with 10K+ views

Official documentation you authored for major projects (React, TensorFlow, etc.)

Technical papers at ACM or IEEE conferences

Articles in tech publications (CSS-Tricks, Smashing Magazine)

Criterion 4: Judging (Limited Application)

How open source satisfies this:

Core maintainer of a major open source project (reviewing PRs from other contributors)

Member of technical steering committee for major projects

Reviewing pull requests for Apache, Linux Foundation, or other major projects

Evidence that works:

Documentation of PR reviews you've completed

Letters from project maintainers confirming your review role

GitHub activity showing consistent PR reviews for 1+ year

Note: This is weaker than traditional peer review for academic journals. It is best used as supporting evidence, not as a primary criterion.

The Five Levels of Open Source Evidence (From Weakest to Strongest)

Level 1: Personal Project with Few Users (Weak)

What it is: You built something and put it on GitHub. It has 100-500 stars but minimal actual usage.

Why it's weak: No evidence of real-world impact or adoption.

Example: A todo app library with 300 stars but only 5 weekly downloads.

Does this satisfy O-1/EB-1A? No.

Level 2: Popular Project with Usage Metrics (Moderate)

What it is: Your project has significant downloads and usage, but limited documented impact.

Metrics:

10K+ monthly downloads (npm, PyPI)

1,000+ GitHub stars

50+ dependent projects

Why it's moderate: Shows adoption but lacks third-party validation of impact.

Example: A React component library with 15K monthly downloads and 2,000 stars.

Does this satisfy O-1/EB-1A? Possibly, with strong supporting evidence (testimonials, articles about your project).

Level 3: Widely Adopted Project with Testimonials (Strong)

What it is: Your project is used by known companies, with documented testimonials and measurable impact.

Evidence:

100K+ monthly downloads

Used by 5+ recognizable companies

Testimonial letters from CTOs/engineering leaders

Blog posts from companies describing how they use your project

Example: A Python data processing library used by Airbnb, Uber, and Netflix, with 200K monthly downloads.

Does this satisfy O-1/EB-1A? Yes, strongly satisfies "original contributions."

Level 4: Project with Academic or Conference Recognition (Very Strong)

What it is: Your project is cited in academic papers, you've presented about it at major conferences, or it's become a reference implementation.

Evidence:

20+ citations in academic papers

Invited to speak at 3+ major conferences (PyCon, React Conf, JSConf)

Referenced in textbooks or university courses

Mentioned in technical publications (InfoQ, ACM Queue)

Example: A machine learning framework cited in 50 papers, presented at NeurIPS, and used in Stanford courses.

Does this satisfy O-1/EB-1A? Yes, very strongly. Likely satisfies multiple criteria (original contributions + speaking/judging).

Level 5: Commercially Critical Project (Strongest)

What it is: Your project is critical infrastructure for major companies, with commercial support or enterprise adoption.

Evidence:

Part of a major foundation (Apache, CNCF, Linux Foundation)

Used by Fortune 500 companies in production

Commercial support offerings built around your project

Press coverage in mainstream tech media (TechCrunch, Wired, MIT Tech Review)

Example: A database library that became an Apache project and is used by Google, Facebook, and Amazon, with press coverage.

Does this satisfy O-1/EB-1A? Yes, this is an extremely strong case. It likely satisfies several criteria (original contributions, press coverage, judging, and critical role if you're employed by a company using it).

How to Document Open Source Work for USCIS

1. Usage Statistics with Context

What to include:

Monthly/weekly download statistics (npm, PyPI, Docker Hub)

Trend graph showing growth over time

Comparison to similar projects (show you're in top 10% by downloads)

Geographic distribution (used in X countries)

How to present:

Screenshot from package registry with date

Export CSV data showing historical trends

Comparative analysis: "My project: 150K monthly downloads. Similar projects: average 25K downloads."
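
The trend export and comparative analysis above can be prepared with a short script. This is a minimal sketch, assuming you have already exported daily download counts from your registry as (date, count) pairs. The function names and every figure are hypothetical, chosen to mirror the 150K versus 25K example, and are not real registry data.

```python
import csv
import io
from collections import defaultdict


def monthly_totals(daily):
    """Sum (ISO date string, downloads) pairs into {"YYYY-MM": total}."""
    totals = defaultdict(int)
    for day, count in daily:
        totals[day[:7]] += count  # "2024-01-30" -> "2024-01"
    return dict(sorted(totals.items()))


def to_csv(totals):
    """Render monthly totals as CSV text that can be attached as an exhibit."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["month", "downloads"])
    for month, count in totals.items():
        writer.writerow([month, count])
    return buf.getvalue()


def compare_to_peers(mine, peers):
    """Return (ratio to the peer average, share of peers with fewer downloads)."""
    average = sum(peers) / len(peers)
    below = sum(1 for p in peers if p < mine)
    return mine / average, below / len(peers)


# Hypothetical sample data for illustration only.
daily = [("2024-01-30", 4000), ("2024-01-31", 5000), ("2024-02-01", 6000)]
totals = monthly_totals(daily)
csv_text = to_csv(totals)

# Hypothetical peer set whose average is 25,000 monthly downloads.
ratio, share_below = compare_to_peers(150_000, [10_000, 20_000, 30_000, 40_000])
```

With these sample numbers, `ratio` is 6.0 (six times the peer average) and `share_below` is 1.0 (every listed peer has fewer downloads). How you choose the peer set matters more than the arithmetic, so state the selection rule alongside the figures.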

2. Dependent Projects

What to include:

List of projects that depend on yours

Notable users (Airbnb, Stripe, etc.)

Screenshots from GitHub showing "Used by X projects"

How to present:

GitHub dependency graph

List of top 10-20 dependent projects with their stars/usage

Letters from companies explaining how they use your project
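
The list of top dependent projects can be assembled from whatever dependency data you collect by hand or export. The following is a rough sketch under that assumption. The `Dependent` record, the function names, and the sample entries (including the example.com URLs) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Dependent:
    name: str
    stars: int
    url: str


def top_dependents(dependents, limit=20):
    """Return the most-starred dependents, highest first, capped at `limit`."""
    ranked = sorted(dependents, key=lambda d: d.stars, reverse=True)
    return ranked[:limit]


def as_exhibit_rows(dependents):
    """Format each dependent as a numbered line for an evidence exhibit."""
    return [
        f"{i}. {d.name} ({d.stars:,} stars) {d.url}"
        for i, d in enumerate(dependents, start=1)
    ]


# Hypothetical sample data for illustration only.
deps = [
    Dependent("alpha-service", 12500, "https://example.com/alpha-service"),
    Dependent("beta-tool", 800, "https://example.com/beta-tool"),
    Dependent("gamma-lib", 43000, "https://example.com/gamma-lib"),
]
rows = as_exhibit_rows(top_dependents(deps, limit=2))
```

Sorting by stars is only one possible ordering. Ranking by the dependent's own download count or by how recognizable the organization is may serve the petition better.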

3. Testimonial Letters from Users

What makes a strong testimonial:

From a CTO, VP of Engineering, or Tech Lead at a known company

Specific description of how they use your project

Impact metrics: "Reduced processing time by 40%" or "Enabled us to scale to 1M users"

Statement comparing your solution to alternatives

Template language: "I am the CTO of [Company], which serves 5 million users. We evaluated 10 similar libraries and selected [Your Project] because of its performance and reliability. It is now critical infrastructure handling 100M requests/day. Without this project, we would have needed to build a custom solution at a cost of $500K and 6 months."

4. Academic Citations

What to include:

List of academic papers citing your project

Google Scholar profile showing citations to your project's paper/documentation

Quotes from papers explaining why they used your project

How to find:

Google Scholar: Search for your project name

arXiv: Search project name in CS papers

GitHub: Some projects track citations in README

5. Conference Presentations

What to include:

Invitations to speak about your project

Conference programs showing you as speaker

Video recordings of talks

Audience size and conference prestige

Best conferences:

Language-specific: PyCon, JSConf, RubyConf, RustConf

Domain-specific: NeurIPS, CVPR (for ML), SIGMOD (for databases)

General tech: DevConf, QCon, O'Reilly conferences

6. Press Coverage

What to include:

Articles about your project in tech media

Being quoted as expert on technology related to your project

Project listed in "Top 10 [Category] Projects" articles

Strong sources:

TechCrunch, Wired, MIT Technology Review

The New Stack, InfoQ, DZone

Official blogs of major companies using your project

Common Mistakes That Weaken Open Source Evidence

Mistake 1: Only Showing GitHub Stats

What people do: Include screenshots of stars, forks, commits.

Why it's weak: USCIS doesn't know what "5,000 stars" means. These are vanity metrics.

Fix: Supplement with usage statistics, testimonials, and real-world impact.

Mistake 2: No Third-Party Validation

What people do: Explain why their project is important in their own words.

Why it's weak: USCIS wants independent verification, not self-promotion.

Fix: Get letters from users, citations from researchers, press coverage from journalists.

Mistake 3: Conflating Popularity with Impact

What people do: Point to high star count as proof of importance.

Why it's weak: Stars don't prove the project is actually used or has impact.

Fix: Show production usage, commercial adoption, or academic citations.

Mistake 4: Not Explaining Technical Significance

What people do: Assume the USCIS officer will understand why your project matters.

Why it's weak: Immigration officers aren't software engineers. You need to explain the problem you solved and why it's significant.

Fix: Include expert letters explaining: "Before [Your Project], developers had to [painful process]. This project reduced that from 40 hours to 2 hours, which is why it's been adopted by 500+ companies."

Combining Open Source with Other Evidence

Strategy 1: Open Source + Conference Speaking

Your open source project generates speaking invitations:

Invited to speak at 5 conferences about your project

This satisfies: Original contributions (your project) + Published material about you (you're featured in conference programs) + Possibly judging (if you're on the program committee)

Strategy 2: Open Source + Press Coverage

Your project gets press coverage:

Featured in TechCrunch article: "Top 10 Python Libraries of 2024"

Interviewed for Wired article about trends in your technology area

This satisfies: Original contributions (your project) + Press coverage (you're featured)

Strategy 3: Open Source + Critical Role

You work at a company that depends on your project:

You're a senior engineer at a company that uses your open source project

Document how your project is critical to the company's infrastructure

This satisfies: Original contributions (your project) + Critical role (your position)

How OpenSphere Evaluates Open Source Evidence

Usage Metrics Analysis:

Input your project's downloads, stars, and dependent projects. OpenSphere benchmarks them against similar projects: Top 5% in your category? Strong evidence. Top 20%? Moderate. Below 50%? Weak; you need testimonials or other evidence.

Citation Tracking:

Import your project's citations from Google Scholar. OpenSphere evaluates whether citation count satisfies "original contributions."

Impact Documentation Checklist:

OpenSphere provides a specific checklist: 3-5 testimonial letters from companies using your project, download statistics with a comparative analysis, and conference speaking evidence or press coverage.

Multi-Criterion Mapping:

OpenSphere shows how your open source work can satisfy multiple criteria: Criterion 5 (project itself), Criterion 6 (articles you wrote about it), Criterion 3 (press coverage), Criterion 4 (PR reviews if applicable).

Open Source Evidence Strength

| Evidence Type | Weak | Moderate | Strong |
| --- | --- | --- | --- |
| Stars/Forks | <1,000 stars | 1,000-5,000 stars | 10,000+ stars + usage proof |
| Downloads | <1K/month | 10K-50K/month | 100K+/month |
| Testimonials | None | Generic praise | Specific impact from known companies |
| Citations | 0-5 | 5-20 | 20+ academic citations |
| Press | Personal blog only | Tech blogs (Medium, Dev.to) | Major tech media (TechCrunch, Wired) |
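
The download and citation rows of the table can be read as a rough self-check. The sketch below encodes them under two labeled assumptions: the table leaves some download ranges unlisted (1K-10K and 50K-100K per month), so the function returns `None` there rather than guessing, and the citation bands overlap at 5 and 20, which this sketch resolves upward. The function names are hypothetical, and the thresholds are this article's rules of thumb, not USCIS rules.

```python
def band_for_downloads(monthly):
    """Map monthly downloads to the table's band, or None in an unlisted gap."""
    if monthly >= 100_000:
        return "strong"
    if 10_000 <= monthly <= 50_000:
        return "moderate"
    if monthly < 1_000:
        return "weak"
    return None  # assumption: 1K-10K and 50K-100K are not covered by the table


def band_for_citations(count):
    """Map a citation count to the table's band; boundary values resolve upward."""
    if count >= 20:
        return "strong"
    if count >= 5:  # assumption: 5 counts as moderate, 20 as strong
        return "moderate"
    return "weak"


# Hypothetical inputs for illustration only.
print(band_for_downloads(15_000))
print(band_for_citations(20))
```

A `None` result means the table gives no guidance for that figure, so lean on testimonials and other qualitative evidence instead.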

Want to know if your open source contributions are strong enough for O-1 or EB-1A - and what additional evidence you need?

Take the OpenSphere evaluation. You'll get open source impact analysis and documentation guidance.

Evaluate Your Open Source Evidence

1. How many GitHub stars do I need for O-1?

There's no threshold. Stars alone don't satisfy any criterion; you need evidence of actual usage and impact.

2. Can I use open source as my only evidence?

Risky. Best to combine with other criteria (press, speaking, judging, awards).

3. What if my project is used by big companies but I can't name them?

Get letters that describe the company without naming it: "I am the CTO of a Fortune 500 company. We use [Your Project] in production serving 10M users."

4. Do contributions to others' projects count?

Yes, if significant (core maintainer, major features). But your own projects are stronger.

5. How do I prove download statistics?

Screenshot from npm, PyPI, Docker Hub, or wherever your project is hosted. Include date and URL.

6. What if my project is open source but hosted privately?

For immigration purposes, publicly visible projects are much stronger. A privately hosted project offers no independent verification.

7. Can I count closed-source work?

Only if your employer provides a detailed letter explaining its impact. Open source has the advantage of public verification.

8. Do I need to be the sole creator?

No, but you need to show you made significant contributions. Co-creating with 1-2 others is fine. Being 1 of 50 contributors is weak.

9. How long does a project need to exist to count?

No specific requirement, but evidence of sustained impact (1+ years of usage) is stronger than a newly launched project.

10. What if my project was influential but is now archived?

Past impact counts. Document peak usage, citations, and legacy (what projects replaced it or built on it).
